Simplifying Shipping with Generative AI

At Shippo we’re always on the lookout for creative ways to use emerging technology to gain more leverage over our day-to-day tasks. Since the release of ChatGPT-3 last November, and the resulting Cambrian explosion of activity around generative AI, we couldn’t help but get a little excited about the potential to improve how work gets done.

The Opportunity

First, some context. As a multi-carrier shipping platform, Shippo maintains partner relationships with a vast network of regional and international carriers. Each carrier in-turn has its own set of tracking capabilities, service levels, coverage areas, APIs, insurance offerings, and extras. As we occupy the layer in between our customers – typically e-commerce merchants and platforms – and the carriers, it’s our job to keep on top of all these details. As you might imagine, this is no small feat.

Our team, the intelligence team, knew there was an internal slack channel where questions about these carrier capabilities were frequently asked – often on behalf of our customers – by our fellow Shippo employees. We also knew that our incredible fellow shipping experts and customer service reps were spending many hours monitoring the questions in this channel and – often after considerable research – responding with detailed answers. Further, we had an intuition that we could free up time for those both asking and answering these questions through the use of intelligent automation. Not content to rely on our intuition alone, we did a quick exploratory data analysis to gather the information needed to begin validating our assumptions. Here is what we found:

- Shippo employees asked 625 questions in 2022 (avg 1.7 per day)

- 541 of the 625 questions asked had at least 1 answer (87%)

- Questions that were answered had a median of 4 replies

- 25% of the questions that were answered took more than 2.92 days to get to a final resolution.

With a ballpark range of around 10,000 and 130,000 minutes being spent on this activity in 2022 alone, we had little doubt this problem was costly and real. Even if our new solution could only answer 10% of the questions asked – a conservative estimate given our ambitious goals, that would still be between 1000 and 13,000 minutes of total time saved each year that our colleagues could spend on higher value activities. Furthermore, as a company that takes pride in our incredible customer service, we figured that if we could answer even a few of the questions asked instantly that previously might have taken days – it would yield a big improvement in employee productivity and the customer experience.

Initial Analysis

Here are examples of the sorts of questions we would routinely see asked in our internal carriers slack channel:

“Can anyone confirm if DHL Express Domestic Express Doc is supported for cross-country shipments within EU?”

“Which carriers in Australia support multi-piece shipments through their API?”

Empirically it seemed like questions often exhibited some variation of the following two patterns:

- Does carrier X support capability B?

- Which carriers support capability A in region T?

Historically, answering these sorts of questions often required consulting a spreadsheet called the carrier capabilities matrix. The layout of this spreadsheet represented rows as carriers, and columns as capabilities and areas supported by that carrier. It looked a bit like this:

With 70+ carriers supported and 80+ capabilities and regions being tracked, even simple questions about a single carrier and capability required up to 6000 individual data points to be analyzed, filtered, and sorted.

Getting Started

Armed with some data, a clear understanding of the problem, and a healthy dose of excitement – we jumped in and started building an LLM powered slack-bot we named PARCEL – Personal Assistant for Routing, Carriers, and Express Logistics. The initial goal with PARCEL was to answer a subset of the questions being asked in our internal carriers slack-channel in real time and with reasonable accuracy. Fast forward about one-month – an eternity in such a rapidly evolving space – and we have no shortage of insights to share.

Prompt Engineering vs Fine-Tuning

Though the exact details of OpenAI’s most recent training datasets are not publicly known, it’s well established that earlier versions of GPT-3’s training set included Common Crawl “a corpus [that] contains petabytes of data collected over 12 years of web crawling”. Given this, we knew that OpenAI’s foundation LLMs were reasonably well-generalized to answer a wide variety of questions. After some initial prompt experimentation, however, we quickly discovered that the foundation models had relatively few deep insights about the shipping industry and about our business domain.

Giving PARCEL access to domain-specific knowledge became the first challenge we needed to overcome. Our first intuition was to address this problem by using OpenAI’s fine-tuning APIs. According to OpenAI, “Once a model has been fine-tuned, you won’t need to provide examples. in the prompt anymore.” In principle this sounded like an ideal solution. In practice however, we quickly ran into some problems:

- Lack of quality data – Our initial corpus of domain-specific facts was only a few thousand documents and somewhat repetitive on account of being synthetically generated from a tabular dataset. We had no easy way of determining how representative and generalized our data was as compared to the questions our real users would ask in the future. This meant that as soon as we deviated from our list of contrived test questions, the quality of the answers declined dramatically.

- Slow Iteration Speed – Fine-tuning a model requires a series of steps including gathering/generating data, generating vector embeddings, and many subsequent rounds of regression testing. As we were still heavily in exploration and discovery mode, we found that the process of repeatedly fine-tuning our models slowed down our team’s ability to iterate quickly.

- Difficult to Add New Information – We were constantly experimenting with new prompt templates, knowledge retrieval techniques, and information summarization methods. Retraining the model every time we wanted it to be aware of some new information or method became impractical.

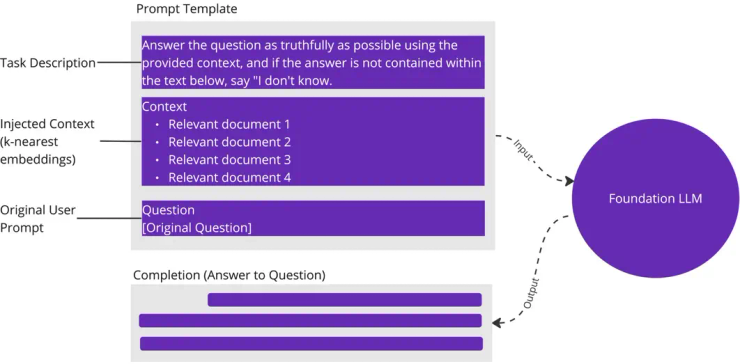

Fortunately, we quickly discovered another technique – retrieval augmented generation – that replaces fine-tuning with document retrieval and a bit of clever prompt engineering. Retrieval augmented generation allowed us to insert relevant snippets of text from our prepared corpus into a dedicated “context” section of each prompt. Using this method, the LLM could easily leverage new information to answer questions without needing to be retrained or fine-tuned. We could now rapidly experiment with new sources of information with minimal risk.

Key Insight: Use fine-tuning to train an LLM to perform a new task and use retrieval augmented generation to give it access to new knowledge. For greenfield projects not yet in production with real human users, consider avoiding fine-tuning altogether, absent a compelling reason related to your use-case.

Balancing Your Token Budget

According to OpenAI, “tokens can be thought of as pieces of words. Before the API processes the prompts, the input is broken down into tokens”. By analogy, tokens are to prompts as a bank account balance is to making a purchase. Managing your token budget effectively is key to generating great results. Here are a few learnings about token budgeting we picked up on our journey:

- Limit tokens allowed by users for input prompts. This gives you, the developer, more flexibility and control over how many tokens are available for injecting context and thus the length and quality of the resulting answer.

- Avoid budgeting too few tokens for the response as this can lead to incomplete or nonsensical answers.

- Different foundation models often use different tokenizer encodings and specific prompt-templates which can impact token counts. Review these carefully if you change models to avoid running into errors and performance issues.

- Consider enforcing a maximum token limit for injected context to avoid excessive costs for performing inference.

We ultimately came up with the following set of formulas for budgeting the tokens on each inference call to our LLM, which you are free to experiment with:

- w = x + y

- z = a – w

- T = w + z

- w = tokens for everything in the prompt template

- x = tokens for the task description and injected context

- y = tokens for original prompt from user

- z = tokens for the prompt completion (aka answer)

- a = max tokens for each call to the LLM in aggregate – shared between prompt and completion and usually set by the foundation model provider.

- T = Total tokens used per call for inference

Key Insight: It is often said that “A budget tells us what we can’t afford, but it doesn’t keep us from buying it.” When building LLM powered apps, thinking about your token budget early can help ensure the right balance between answer quality and inference cost.

Thinking about Performance

Response latency can be defined as the time it takes for a request to be processed and a complete response to be returned. This is a critical factor when building LLM-powered apps. Overlooking this metric may lead to a poor user experience and a reduction in system throughput. A few of our learnings here:

- Avoid chaining multiple calls to an LLM when possible. If call-chains are necessary, consider limiting the number of calls to two or less per input prompt.

- When multiple calls are needed to an LLM in a latency-sensitive environment, consider using conventional NLP techniques to deconstruct the original prompt into multiple separate prompts which can be resolved in parallel instead of sequentially.

- Longer prompts tend to take longer for the model to respond to. When practical, consider limiting context and prompt sizes to keep performance snappy.

- Always give your users some sort of visual indication that the system is working on an answer so they don’t get impatient.

- Popular algorithms for queries with word embeddings include cosine similarity and euclidean distance. These work well, but quickly get computationally expensive as the size of your information corpus grows. Time these queries and know when it’s necessary to move your query workload to optimized infrastructure like a specialized embedding database or pre-indexed neural-search framework.

Key Insight: Think carefully about using techniques like compound question splitting, multi-step summarization, and chain-of-thought prompting. Improvements to answer quality may come at the expense of system performance and perceived user experience.

Semantic Search

Semantic search builds upon keyword and fuzzy-matching techniques from earlier generations of search technology by adding in the use of word embeddings. Embeddings are numerical vectors that represent the underlying meaning of a piece of text and can be used to quickly find highly relevant search results. Current state-of-the-art techniques for question answering and knowledge retrieval often combine semantic search with the power of generative AI to deliver precise answers over a large corpus of frequently changing information.

A few of our team’s learnings:

- Semantic search query results are often scored by a relevance metric. Consider experimenting with a relevance floor threshold to avoid confusing the LLM by stuffing lots of potentially irrelevant information into the context window.

- Split your query retrieval and ranking algorithms into discrete steps for more fine-grained control over performance and relevance tuning.

- Consider using different corpora to answer different types of questions to get the highest quality answers in the shortest amount of time.

- Because LLMs are trained on a large corpus of unstructured text, they tend to be a bit easier to integrate with textual datasets than structured datasets.

Key Insight: Combining semantic search techniques with generative AI is a powerful and flexible method for many emerging use-cases. Experiment liberally with many variations of these technologies to arrive at a great solution to the problem you are solving.

Conclusion

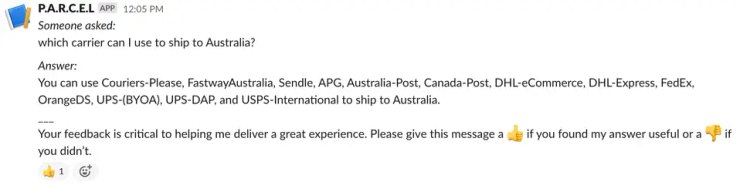

A month after we started working on PARCEL, we shipped our v1 into production. Here is an example of what answering questions about carrier capabilities looks like now:

It’s still early days for this project, but we are seeing promising signs of engagement and interest from our fellow Shippo employees. Perhaps, as importantly, we now have a real human feedback loop in place and no longer have to guess at what sort of questions people want answered. As we plan for the coming months, we can’t help but feel excited about the future potential of PARCEL and generative AI. With a bit of luck, perhaps we might even have some customer-facing product news to share soon :-).